The UX-Lab foundation

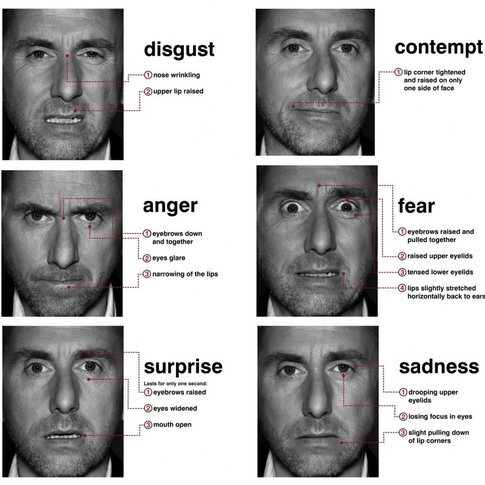

While researching a test room with the UX-Lab foundation, I became interested in the Affdex API, which measures the emotions, expressions and appearance of a subject from a photo or video. This technology is based on the most widespread method for describing “observable facial movements”: FACS (Facial Action Coding System), created by Paul Ekman in the 1970s and regularly updated since. The psychologist initially identified 6 universal expressions (anger, disgust, fear, joy, sadness and surprise) and studied microexpressions in detail.

Resource | Paul Ekman's Facial Action Coding System

During user tests, it is common to observe a gap between what testers say and the expression on their faces. Some users give positive feedback and explain that they encountered no particular difficulty, while the video shows signs of stress or worry. A specific emotion is not always easy to identify by eye, which is why dedicated tools can be useful. Facial expression is the most studied channel of emotion: a specific muscle pattern corresponds to each emotion.

The Affdex API

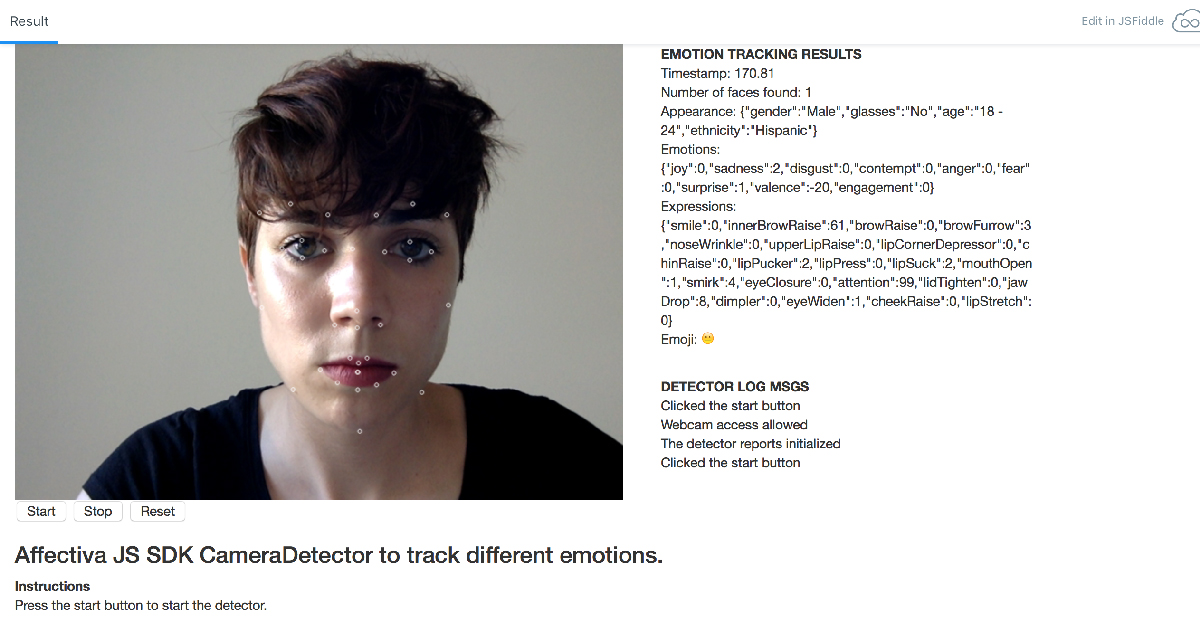

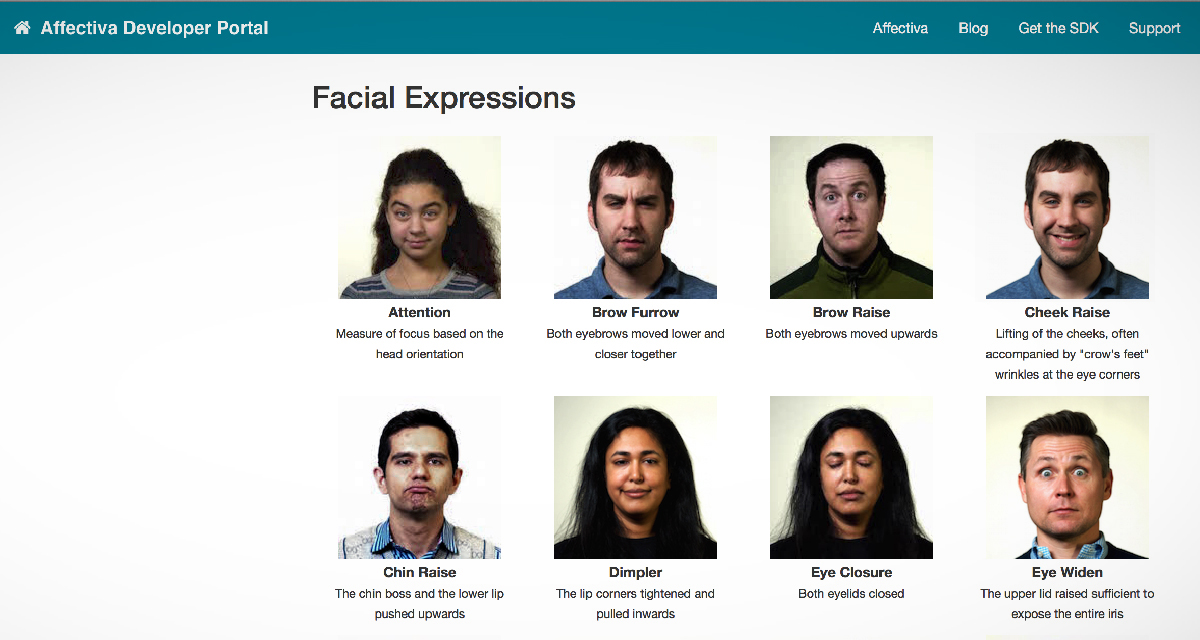

This tool looks very promising for a user test room, where the need for precise analysis often emerges. We may need to see at what point in the user journey the tester stumbles, hesitates or loses concentration. The Affdex API provides this information in raw form, as numerical indicators. We analyzed 21 expressions, each associated with a particular movement of the mouth, nose, eyebrows and face.
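To illustrate how such numerical indicators could point to the moment a tester stumbles, here is a minimal sketch. It assumes each analyzed frame has already been reduced to a timestamp plus a map of scores between 0 and 100. The `ExpressionSample` shape, the `findEpisodes` helper and the `browFurrow` key are names chosen for this example, not part of the Affdex SDK.

```typescript
// Hypothetical shape for one analyzed frame (not the Affdex SDK's own type).
interface ExpressionSample {
  timestamp: number;               // seconds since the start of the recording
  scores: Record<string, number>;  // expression name -> score between 0 and 100
}

interface Episode {
  start: number; // timestamp of the first sample at or above the threshold
  end: number;   // timestamp of the last consecutive sample at or above it
  peak: number;  // highest score seen during the episode
}

// Return the time spans during which `expression` stays at or above `threshold`.
function findEpisodes(
  samples: ExpressionSample[],
  expression: string,
  threshold: number
): Episode[] {
  const episodes: Episode[] = [];
  let current: Episode | null = null;

  for (const sample of samples) {
    // A missing score is treated as 0 (assumption made for this sketch).
    const score = sample.scores[expression] ?? 0;
    if (score >= threshold) {
      if (current === null) {
        current = { start: sample.timestamp, end: sample.timestamp, peak: score };
      } else {
        current.end = sample.timestamp;
        current.peak = Math.max(current.peak, score);
      }
    } else if (current !== null) {
      episodes.push(current);
      current = null;
    }
  }
  // Close an episode that is still open when the recording ends.
  if (current !== null) {
    episodes.push(current);
  }
  return episodes;
}

// Illustrative data only: four frames, one second apart.
const furrowSamples: ExpressionSample[] = [
  { timestamp: 0, scores: { browFurrow: 5 } },
  { timestamp: 1, scores: { browFurrow: 62 } },
  { timestamp: 2, scores: { browFurrow: 80 } },
  { timestamp: 3, scores: { browFurrow: 10 } },
];
const furrowEpisodes = findEpisodes(furrowSamples, "browFurrow", 50);
```

Each returned episode can then be matched against the video timeline to see which step of the journey the tester was on. The threshold of 50 is arbitrary and would need tuning on real recordings.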

Here is the example presented by Affdex with the detection points visible:

Resource | JSFiddle Emotion tracking by AFFDEX

Affdex lists the facial expressions it uses:

Resource | Facial Expressions

The prototype

The raw data returned by Affdex, values between 0 and 100, is difficult to use as is. So I used CanvasJS to build, on the fly, a graph that plots each expression.
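As a rough illustration of that step, the sketch below groups per-frame scores into one line series per expression. The `ExpressionFrame` shape and the `toLineSeries` helper are assumptions made for this example and are not taken from the prototype's repository. The output objects are modeled on the `type` / `name` / `dataPoints` layout that CanvasJS uses for line series.

```typescript
// Hypothetical shape for one analyzed frame (not the Affdex SDK's own type).
interface ExpressionFrame {
  timestamp: number;               // seconds since the start of the recording
  scores: Record<string, number>;  // expression name -> raw score
}

interface DataPoint {
  x: number; // time in seconds
  y: number; // score, clamped to the 0..100 range
}

// Plain object modeled on a CanvasJS line series (assumed layout).
interface LineSeries {
  type: "line";
  name: string;
  showInLegend: boolean;
  dataPoints: DataPoint[];
}

// Build one line series per expression, in order of first appearance.
function toLineSeries(frames: ExpressionFrame[]): LineSeries[] {
  const byName = new Map<string, LineSeries>();
  const ordered: LineSeries[] = [];

  for (const frame of frames) {
    for (const name of Object.keys(frame.scores)) {
      let series: LineSeries | undefined = byName.get(name);
      if (series === undefined) {
        series = { type: "line", name, showInLegend: true, dataPoints: [] };
        byName.set(name, series);
        ordered.push(series);
      }
      const raw = frame.scores[name] ?? 0;
      // Clamp defensively so a stray value cannot distort the chart axis.
      const clamped = Math.min(100, Math.max(0, raw));
      series.dataPoints.push({ x: frame.timestamp, y: clamped });
    }
  }
  return ordered;
}

// Illustrative data only.
const chartData = toLineSeries([
  { timestamp: 0, scores: { smile: 12, browFurrow: 40 } },
  { timestamp: 1, scores: { smile: 120, browFurrow: 35 } },
]);
```

An array like `chartData` could then be supplied as the `data` option of a chart and redrawn as new frames arrive, which is one way to get the on-the-fly effect.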

The resulting prototype makes it possible both:

– to record video feedback from the computer webcam

– to archive the expression graph corresponding to the recorded video

The code is available on GitHub | https://github.com/francasix/user-test

Use and Extension

This combination of tools gives a first glimpse of what can be achieved in user analysis, but the idea is to push the level of detail and the possibilities further rather than stop at a simple prototype. Think of Affdex as a starting point from which more ambitious ideas can flow. I am already thinking about automatically sorting the graphs of a user test by trend so they can be compared more easily, or even about an analysis algorithm that would bring out a feeling or an expression at a given moment by studying the different API data in parallel.
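One possible reading of "sorting by trend" is to fit a straight line through each graph and order the sessions by its slope. The sketch below does this with an ordinary least-squares slope. The `SessionGraph` shape and both helper names are hypothetical, and a real analysis might well prefer a different trend measure.

```typescript
interface GraphPoint {
  x: number; // time in seconds
  y: number; // expression score between 0 and 100
}

// Hypothetical container for one tester's graph of a single expression.
interface SessionGraph {
  tester: string;
  points: GraphPoint[];
}

// Least-squares slope of y against x.
// Returns 0 when there are fewer than two points or all x values are equal.
function trendSlope(points: GraphPoint[]): number {
  const n = points.length;
  if (n < 2) {
    return 0;
  }
  let sumX = 0;
  let sumY = 0;
  for (const p of points) {
    sumX += p.x;
    sumY += p.y;
  }
  const meanX = sumX / n;
  const meanY = sumY / n;

  let numerator = 0;
  let denominator = 0;
  for (const p of points) {
    const dx = p.x - meanX;
    numerator += dx * (p.y - meanY);
    denominator += dx * dx;
  }
  return denominator === 0 ? 0 : numerator / denominator;
}

// Return a sorted copy, steepest rising trend first; the input is left untouched.
function sortByTrend(sessions: SessionGraph[]): SessionGraph[] {
  return sessions
    .slice()
    .sort((a, b) => trendSlope(b.points) - trendSlope(a.points));
}

// Illustrative data only.
const sortedSessions = sortByTrend([
  { tester: "B", points: [{ x: 0, y: 50 }, { x: 1, y: 40 }, { x: 2, y: 30 }] },
  { tester: "A", points: [{ x: 0, y: 10 }, { x: 1, y: 20 }, { x: 2, y: 30 }] },
  { tester: "C", points: [{ x: 0, y: 5 }, { x: 1, y: 5 }] },
]);
```

With this sample data, tester A (rising) comes first, C (flat) second and B (falling) last. A single slope hides short spikes, so it would complement a moment-by-moment analysis rather than replace one.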

Pauline Gaudet-Chardonnet / UX-Scientist – UX-Lab Foundation @UXRepublic
