So no, ErgonUX is not a cosmetics brand but rather the little name I have given to the methodology that I deployed during a mission relating to the design of an autonomous vehicle. The objective of this article is to present this methodology, not the detailed results of the study.

At the heart of industrial and political concerns, the development of the autonomous vehicle is a major challenge, economically, for road safety, and for the development of sustainable mobility. Since the launch of the Google Car in 2010, which gave great impetus to this innovation, car manufacturers, as well as many equipment manufacturers and research institutes, have been working on this topic.

#1 But what is an autonomous vehicle?

An autonomous vehicle is a vehicle in which all or part of the driving is delegated to the system. This type of vehicle represents a global turning point in the automotive field and heralds an upheaval in our current driving habits.

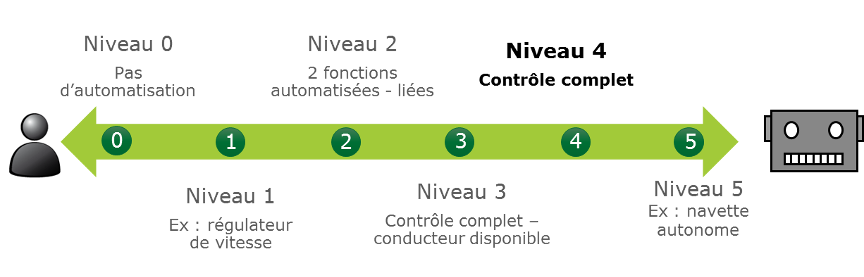

The Society of Automotive Engineers (SAE, 2016) defines 6 levels of automation:

- Level 0: No automation

The driver must monitor the driving environment and perform the complete driving task.

- Level 1: Driver assistance

The system takes over one function of the driving task. The driver is responsible for all remaining aspects of the driving task. The system is not able to detect the limits of all of its capabilities, which remains the responsibility of the driver. The driver must therefore monitor the proper functioning of the system at all times and regain control of the vehicle immediately if necessary. (Example: lane keeping or cruise control.)

- Level 2: Partial automation

The system takes over one or more functions of the driving task simultaneously. The driver must continuously monitor the system and the driving environment and is responsible for performing all remaining aspects of the driving task. Non-driving activities are not permitted. When the system identifies its limits, the driver must be able to regain control of the vehicle immediately. (Example: lane keeping and cruise control.)

- Level 3: Conditional automation

The system takes care of all aspects of driving (longitudinal and lateral control). The driver does not have to constantly monitor the system. Activities not related to driving are permitted on a limited basis. The system identifies the limits of its performance; however, it is not capable of bringing the vehicle back to a minimal-risk state in all situations. Consequently, the driver must be able to regain control of the vehicle within a given period of time.

- Level 4: High automation

The system takes care of all aspects of driving (longitudinal and lateral control). The driver does not have to constantly monitor the system. Non-driving activities are permitted throughout the autonomous driving phase. The system identifies the limits of its performance and can automatically cope with any situation. At the end of this autonomous driving phase, the driver must be able to regain control of the vehicle.

- Level 5: Full automation

The system takes care of all aspects of driving (longitudinal and lateral control). Driver action is not required at any time. The system identifies the limits of its performance and can automatically deal with any situation arising during a complete journey (for example: autonomous shuttle or Google Car).
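For readers who like to see a taxonomy as data, the six levels above can be summarized in a small lookup. This is only a sketch of the descriptions given in this article, not of the SAE standard itself; the names `SaeLevel`, `DriverRole` and `driver_role` are made up for the illustration, and treating levels 0 and 1 like level 2 for non-driving activities is an assumption.

```python
from dataclasses import dataclass
from enum import IntEnum


class SaeLevel(IntEnum):
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


@dataclass(frozen=True)
class DriverRole:
    """What is expected of the driver (hypothetical summary type)."""
    must_monitor_continuously: bool
    non_driving_activities: str  # "none", "limited" or "allowed"
    driver_action_required: bool  # driving or taking over at some point


def driver_role(level: SaeLevel) -> DriverRole:
    """Simplified reading of the level descriptions in this article."""
    if level <= SaeLevel.PARTIAL_AUTOMATION:
        # Assumption: levels 0 and 1 are treated like level 2 here,
        # since the driver must monitor at all times.
        return DriverRole(True, "none", True)
    if level == SaeLevel.CONDITIONAL_AUTOMATION:
        return DriverRole(False, "limited", True)
    if level == SaeLevel.HIGH_AUTOMATION:
        return DriverRole(False, "allowed", True)
    return DriverRole(False, "allowed", False)
```

Seen this way, level 4 is the first level where non-driving activities are fully allowed while a takeover by the driver is still expected.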

What if Santa Claus had an autonomous sleigh?!

Farewell to fatigue for the reindeer who could then enjoy this evening of sharing with the other members of their tribe.

With an (even more) optimized route, Santa could then spend some time with the children. He would also finish his round of the chimneys more quickly and less tired. This would allow him to join Mrs. Claus and his faithful companions, the elves, in time to eat the famous Yule log.

No more risk of collision with shooting stars; no more risk of the sleigh full of gifts being damaged or falling, so no more children disappointed not to have received the gift they had been waiting for so eagerly.

A smooth Christmas thanks to Sleigh 5.0.

In our study, we sought to document level 4 autonomous driving activity. The advantages usually put forward for this level of automation are safety (fewer accidents), traffic flow (fewer of the traffic jams we all hate), and the possibility of using driving time for other activities (reading a newspaper or watching a film, for example).

However, the introduction of this type of vehicle still raises many questions.

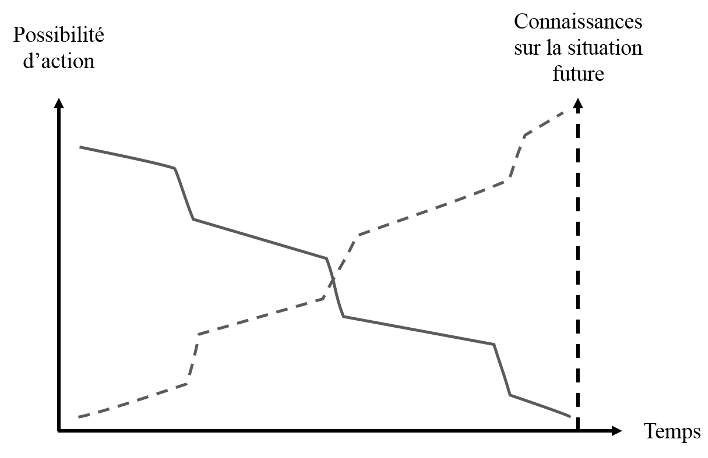

The autonomous system, although taking charge of all the driving functions, can, at any time, ask the driver to regain control of the vehicle.

The driver is therefore no longer responsible for driving or for the safety of the vehicle. He has no obligation to monitor either the environment or the behavior of the system.

The question of the driver's ability to regain control of the vehicle after being "disconnected" from driving is then completely legitimate.

#2 A quick overview of the effects of automation

As early as 1983, Bainbridge underlined the irony of automation: the simplest tasks are delegated to the machine, and the human operator is left with the most complex ones. Freyssenet (1984) adds that automation can make the difficult dimensions of the task even more difficult, that it hinders the development of skills, and that it reduces attention to the exceptional event (which requires a quick decision) while creating anxiety about waiting for an incident.

Furthermore, various studies show that driving performance after an automated driving phase decreases as the level of automation increases and when the driver carries out on-board life activities.

This calls into question the benefits one would expect from automation: safety and the ability to perform other activities.

#3 The design paradox

As we said, we were looking to document level 4 autonomous driving activity. But this type of vehicle is not yet on the market, and we faced several obstacles:

From a technical point of view, the prototypes, although more and more advanced, remain limited and require constant supervision. So they no longer correspond to level 4.

From a legal point of view, even if level 4 autonomous vehicles were marketed, the Vienna Convention requires drivers to maintain control of the vehicle and to remain responsible for it during autonomous driving; they therefore have to watch the road and keep their hands on the wheel. So it is no longer a level 4 autonomous vehicle but rather level 3.

As for the prototypes, they can only be piloted by experts.

To date, it is therefore impossible to observe the uses of this type of device in real situations or to understand the activity of delegated driving, given that the latter does not yet exist. This is called the design paradox (Theureau and Pinsky, 1984; Daniellou, 2004).

Our objective was then to find a way to overcome this paradox in order to anticipate the uses of future users as early as possible and to integrate them into the different design stages of this type of vehicle.

To do this, we studied so-called reference situations of assisted driving, as well as simulated situations on a simulator and on a track.

#4 Driving activities studied

Three types of driving situations were analysed:

- Driving situations with a driver assistance system:

The analysis concerns the activity of three drivers whose vehicles were equipped with different driver assistance systems. We observed two journeys with each driver and sought to document their activity related to the use of their driver assistance systems.

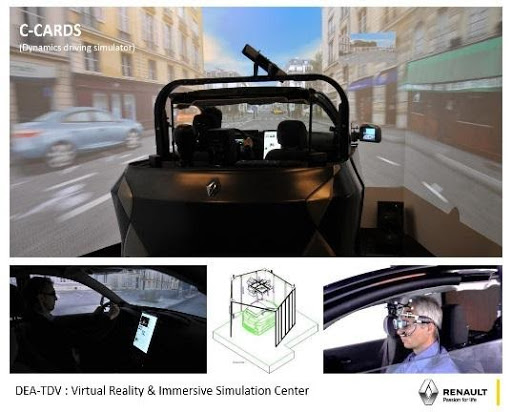

- An autonomous driving situation on a simulator:

The analysis concerns the activity of twenty-nine drivers who were placed in a delegated driving situation on Renault's C-CARDS simulator. The drivers made three 15-minute journeys on a motorway limited to 110 km/h. Of these 15 minutes, the drivers activated autonomous driving for about 10 minutes, at the end of which the system asked them to regain control of the vehicle.

- A simulated autonomous driving situation on the track:

This analysis relates to the activity of nine drivers. To do this, we designed a Wizard of Oz vehicle, which can be driven from the classic driver's position (with the steering wheel and pedals) but also by a co-driver, using a joystick hidden from the driver. When the driver believed he was activating autonomous driving, the co-driver took control of the vehicle (via the joystick). The drivers made three 15-minute journeys on the Satory road track, which we had limited to 50 km/h (for safety reasons). Of these 15 minutes, the drivers activated autonomous driving for approximately 12 minutes, at the end of which the system asked them to regain control of the vehicle.

#5 Data collection methodology

In our studies, we had two types of data: extrinsic data (or “3rd person” data) and intrinsic data (or “1st person” data).

So-called extrinsic data are data about the subjects recorded by the observer. They do not take into account how the subject experiences the situation. They cover all the non-subjective, observable data collected on the subject: for example, reaction times, biomechanical data, frequency measurements, etc.

So-called intrinsic data are data based on the “acting subject’s point of view” (Vermersch, 2010). These data are not of the same order; they are difficult to quantify but inform us about the unobservable parts of the subject's activity. It is then a question of accessing the so-called "lived" experiences of users and therefore their own point of view on the activity carried out (Cahour and Créno, 2017).

The objective is to relate the extrinsic point of view on the activity, that of the researcher who observes the subject acting, to the intrinsic point of view on the activity, that of the subject who acts in a situational context that makes sense to him. This is called method triangulation (Flick, 1992).

In our research, we put both types of data at the center of our studies to better understand the driver's activity during autonomous driving and takeover.

The data collection methodology was not quite the same for the three situations.

For the reference situations, we were in the vehicle with the driver. During the journeys, we asked the driver to verbalize his activity (as far as possible).

Paper-and-pencil notes also allowed us to pragmatically collect contextual elements to complete the recordings.

Despite tight camera angles, we also filmed and photographed some moments of his activity and the artifacts used.

The methodology for collecting data on the simulator and on the Wizard of Oz vehicle was quite similar.

Both vehicles were equipped with numerous sensors that allowed us to record multiple data such as vehicle speed, interactions with the steering wheel, buttons, pedals (brake or accelerator), turn signals, presence / absence of hands on the steering wheel (thanks to presence sensors), or even the state of the vehicle (manual driving, autonomous driving available, autonomous driving, request for takeover).
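To make the shape of such recordings more concrete, here is a minimal sketch of what one logged sample and the extraction of vehicle state changes might look like. The four states come from the list above; the `Sample` fields, the names and the one-record-per-timestamp layout are assumptions made for illustration, not the actual logging format of the simulator or of the Wizard of Oz vehicle.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional, Tuple


class VehicleState(Enum):
    MANUAL = "manual driving"
    AUTONOMOUS_AVAILABLE = "autonomous driving available"
    AUTONOMOUS = "autonomous driving"
    TAKEOVER_REQUEST = "request for takeover"


@dataclass(frozen=True)
class Sample:
    """One hypothetical log record; field names are illustrative."""
    t: float  # seconds since the start of the journey
    speed_kmh: float
    hands_on_wheel: bool
    state: VehicleState


def state_changes(samples: List[Sample]) -> List[Tuple[float, VehicleState]]:
    """Return (time, new_state) each time the vehicle state changes."""
    changes: List[Tuple[float, VehicleState]] = []
    previous: Optional[VehicleState] = None
    for sample in samples:
        if sample.state is not previous:
            changes.append((sample.t, sample.state))
            previous = sample.state
    return changes
```

Timestamps like these are what make it possible to line up sensor data with the video recordings and the interviews afterwards.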

They were also equipped with four synchronized cameras whose footage was recorded. On the simulator, the drivers wore a glasses-type eye tracker.

Left: capture of the recording from the four synchronized cameras. Right: photograph of a driver wearing the eye tracker (top) and the video feed from the eye tracker (bottom).

Finally, for the three studies, we conducted interviews with the drivers after each driving situation.

#6 "KPI"

For assisted driving situations, we recorded how long the driver assistance systems were used, and how often and why they were activated and deactivated.

For autonomous driving situations on the simulator and with the Wizard of Oz vehicle, we were interested in:

- Autonomous driving activity, in particular through the carrying out of on-board life activities (reading or using the telephone, for example) and their interruptions:

  - Time elapsed between activation of the autonomous system and the carrying out of an on-board life activity (phone use, reading)

  - Total time spent on on-board life activities compared to autonomous driving time

  - Maximum time spent on on-board life activities without interruption

  - Number, duration, frequency and type of interruptions of on-board life activities

- The activity of deactivating the autonomous system, through the various actions carried out between the system's takeover request and the actual takeover of the vehicle:

  - Time taken to stop the on-board life activity

  - Understanding the sound alert via the HMI

  - Retrieval of information from the road environment

  - Return to driving position (freeing the hands and putting them back on the steering wheel, putting the foot back above or on the pedal, repositioning in the seat, looking at the road)

  - Time to deactivate the system

  - System deactivation mode
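As an illustration only, the timing indicators listed above could be derived from timestamped intervals along the following lines. The `Interval` type, the function names, and the conventions used (delay measured up to the start of the first activity, one interval per uninterrupted stretch of activity) are assumptions made for this sketch; they do not describe the tooling actually used in the studies.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Interval:
    """A time span in seconds since the start of the journey."""
    start: float
    end: float

    @property
    def duration(self) -> float:
        return self.end - self.start


def autonomous_phase_kpis(
    autonomous: Interval, activities: List[Interval]
) -> Dict[str, Optional[float]]:
    """Indicators for one autonomous phase (assumed non-empty).

    Each interval in `activities` is one uninterrupted stretch of
    on-board life activity inside the autonomous phase.
    """
    ordered = sorted(activities, key=lambda a: a.start)
    total = sum(a.duration for a in ordered)
    delay = ordered[0].start - autonomous.start if ordered else None
    return {
        "delay_before_first_activity_s": delay,
        "activity_share": total / autonomous.duration,
        "longest_uninterrupted_s": max(
            (a.duration for a in ordered), default=0.0
        ),
        # A gap between two stretches counts as one interruption.
        "interruptions": float(max(len(ordered) - 1, 0)),
    }


def takeover_kpis(
    request_t: float, activity_stop_t: float, deactivation_t: float
) -> Dict[str, float]:
    """Delays measured from the takeover request."""
    return {
        "time_to_stop_activity_s": activity_stop_t - request_t,
        "time_to_deactivate_s": deactivation_t - request_t,
    }
```

The qualitative indicators (understanding the alert, deactivation mode, type of interruption) do not reduce to timestamps in this way; they rely on the videos and interviews.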

#7 Conclusion

As we have said, the objective of this article was not to present the results of the three studies described above but to highlight what the methodology, or rather methodologies, put in place contributed to the design of the autonomous vehicle.

All of these analyses have enabled us to develop knowledge about the needs and objectives of users in autonomous driving situations and to anticipate the uses of this type of vehicle, in order to propose anthropocentric design perspectives.

Beyond improvements to HMIs or to the operating principles of the autonomous vehicle, this research has highlighted elements that echo the irony of automation discussed at the beginning of the article.

Indeed, in autonomous driving situations, many drivers deactivated the autonomous system, and therefore regained control of the vehicle, before they were actually able to act once again as drivers in control of their vehicle.

Some drivers regained control of the vehicle before looking at the road, and almost all of them did so without taking the time to check their mirrors. They therefore regained control of the vehicle without having all the information needed for driving.

“When I regain control, I don't necessarily have all the information about what surrounds me. There is a time to disconnect from what you are doing, to analyze the situation which is completely new, the speed, the situation on the road in relation to others. So I come back gradually, even though I regained control right away.”

(Excerpts from interviews).

Others regained control of the vehicle before putting their hands back on the wheel or while still holding the object of their life activity on board, not having had time to free themselves from it.

“There is a small moment of hesitation. […] So I have regained control but my hands are not active yet. And it's not normal. In fact, I don't immediately grab the steering wheel because I don't feel like I'm in control of the vehicle. And I see myself having this delay and saying to myself ‘why don’t you take the wheel in hand’. […] There is a moment when the vehicle is without control in terms of direction. For a short time, no one is in control of the vehicle.”

(Interview excerpts).

Photographs of a driver who regains control of the vehicle without taking the time to put down the magazine he was reading during autonomous driving.

Finally, drivers who wear glasses (for distance vision, to drive, or for near vision, to carry out their on-board life activity during autonomous driving) regained control of the vehicle without taking the time to put on or take off their glasses. They therefore regained control of the vehicle without the correct visual correction.

The photograph opposite shows a driver who did not take the time to remove his glasses (which he needed during autonomous driving in order to read messages on his mobile phone) before regaining control of the vehicle. We notice that he can still look at the road without his glasses by lowering his head and looking over them.

No such workaround was available to drivers who had taken off their glasses to carry out their on-board life activity and who regained control before being able to put them back on.

One can then question the road safety benefits put forward for this type of vehicle. With an efficient system, the number of accidents during autonomous driving is indeed likely to decrease. But might the overall number not increase because of accidents that occur when the driver takes over the vehicle?

Besides, doesn't it pose some ethical problems to put the driver in a position that he wouldn't be able to handle properly? Does this type of vehicle give drivers the means to be “good” drivers? Or on the contrary, does it not reduce their ability to act as a driver? Will drivers agree to buy a vehicle that could put them, when they take control, in a more dangerous situation than without automation?

“I go from totally disconnected to having to be an operational driver. […] I am not given everything I need to become operational again.”

(Excerpts from interviews).

These questions therefore also arise for Sleigh 5.0: will it allow Santa Claus to be as efficient as with his reindeer? Being completely disconnected from his tour of the chimneys, won't he forget a few houses? Much to the dismay of the children...

See you tomorrow for new surprises on our UX-Republic Advent calendar!

Celine POISSON, UX Researcher @UX-Republic – Doctor in Ergonomics
